|

I am an Applied Research Scientist at Adobe, working on Adobe's GenAI Firefly. Previously, I was a second-year MS student in the department of Computer Science at Georgia Institute of Technology. My interests span a broad range of subfields in Computer Science, including Deep Learning, Machine Learning, Computer Vision, and Natural Language Processing.

I have worked on various projects and internships involving Computer Vision and NLP tasks. I completed my graduate research under Professors Devi Parikh and Dhruv Batra, where I worked on problems related to multi-modal AI.

LinkedIn / Resume / Google Scholar / GitHub |

|

|

|

|

|

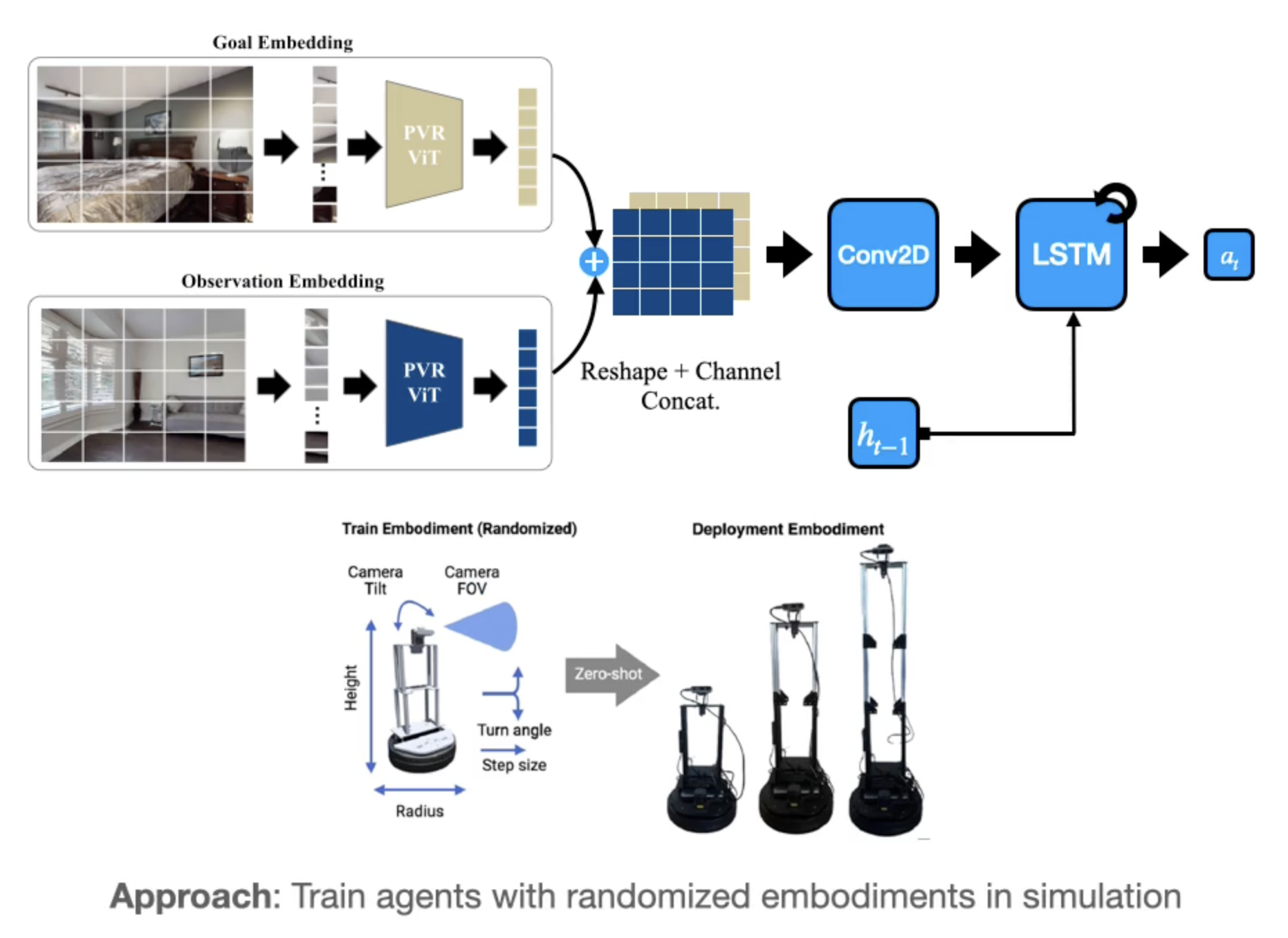

We present Embodiment Randomization, a simple, inexpensive, and intuitive technique for training robust behavior policies that can be transferred to multiple robot embodiments. |

|

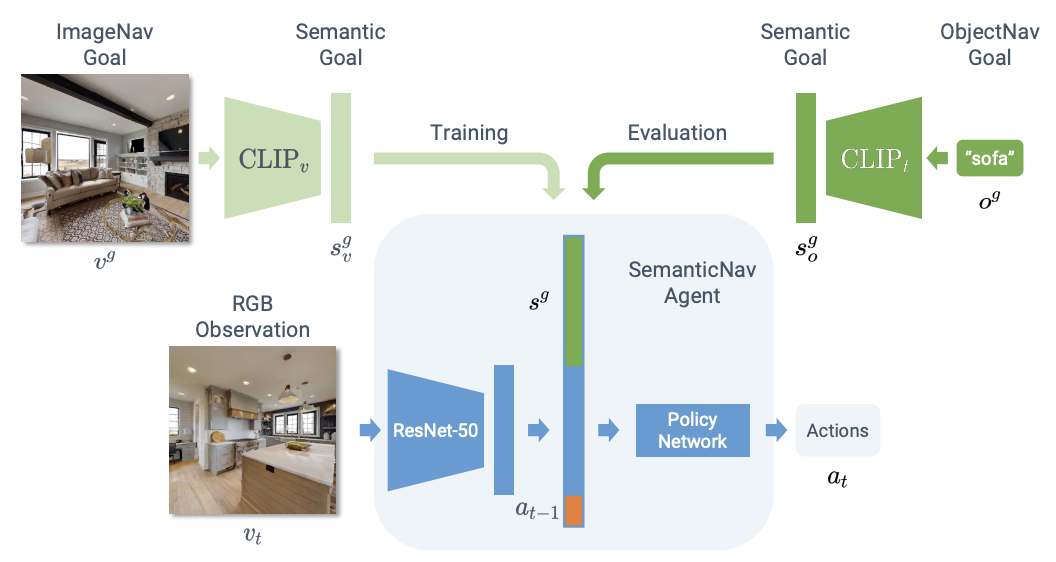

Proposed a zero-shot approach for object-goal navigation by encoding goal images into a multi-modal, semantic embedding space.

|

|

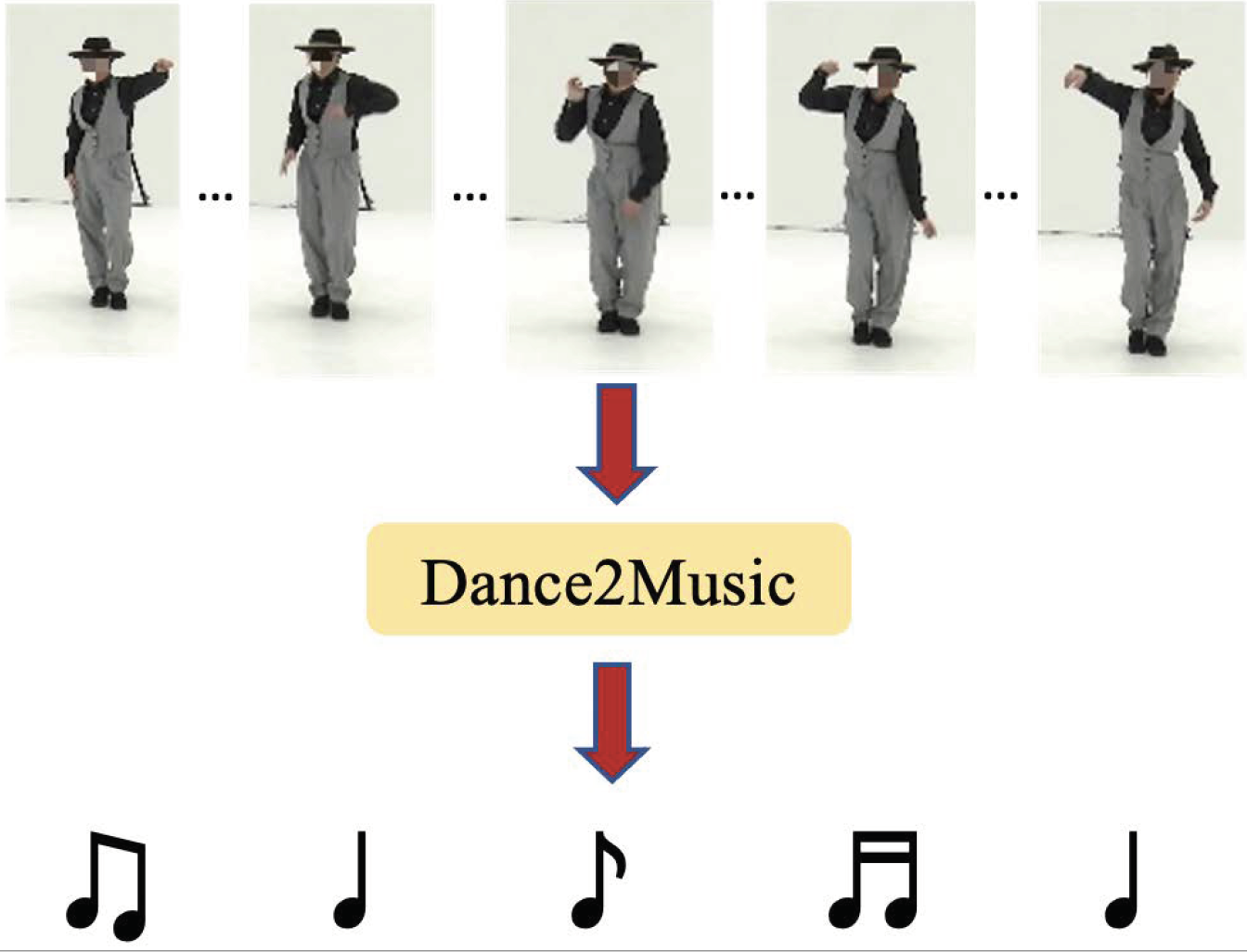

Proposed an approach to generate music conditioned on dance in real-time.

|

|

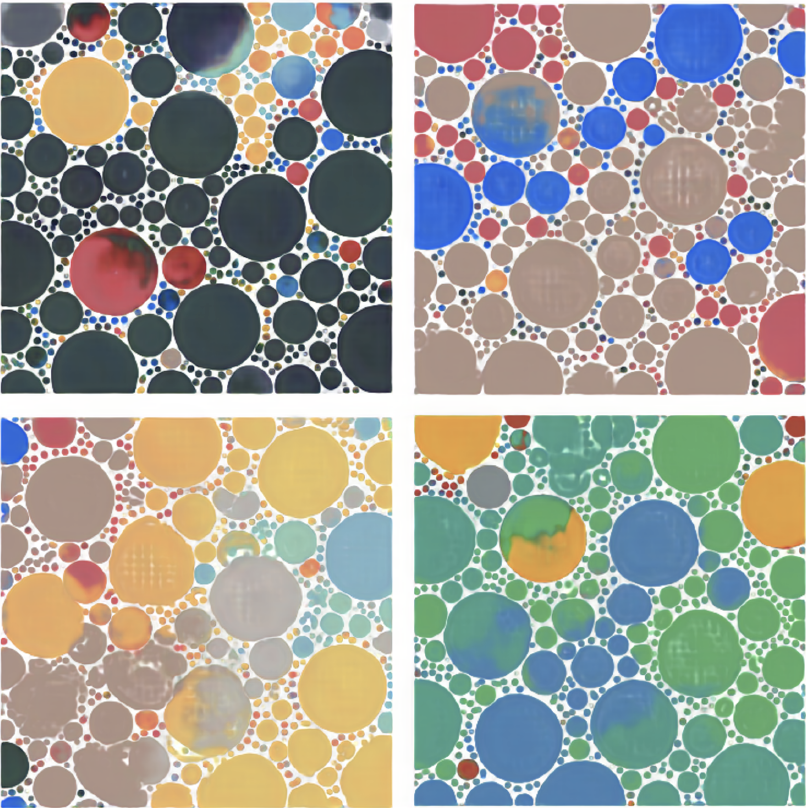

Proposed a new hybrid genre of art: neuro-symbolic generative art (NSG).

|

|

Explored the benefits of adversarial training for neural networks.

|

|

Developed a multi-conditional image generation pipeline in an unsupervised way using a hierarchical GAN framework.

|

|

|

|

|

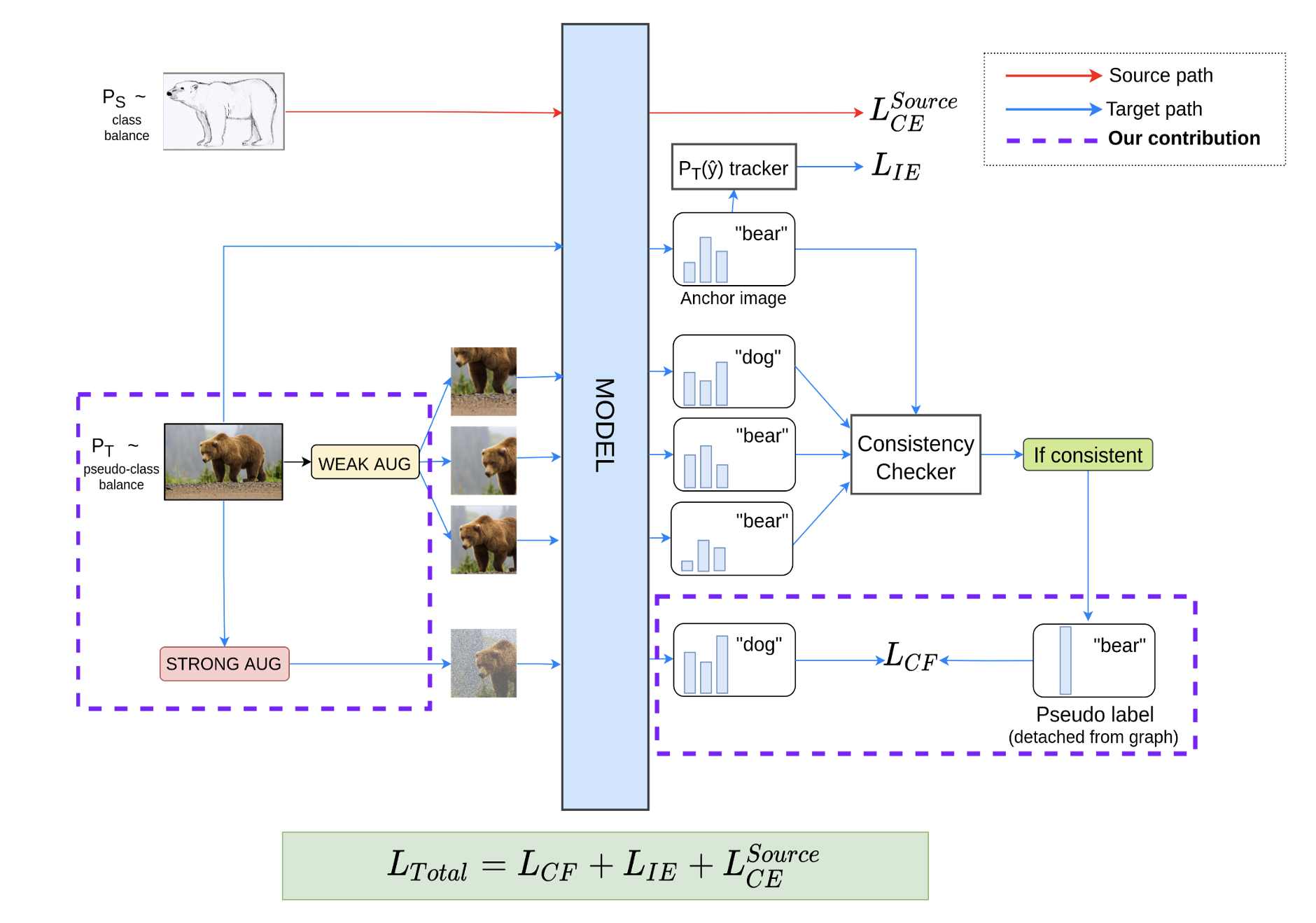

Unsupervised Domain Adaptation

Used FixMatch consistency to achieve a 4% improvement over the state-of-the-art approach for Unsupervised Domain Adaptation from SVHN to MNIST. |

|

Sentiment Analysis using Deep Learning

Applied deep learning to perform sentiment analysis across several Indian languages.

|

|

Knowledge Extraction

Analyzed the UCI Student Performance dataset and classified student grades using several models, such as KNN, decision trees, and SVM.

|

|

Textual Search Engine

Implemented sentence tokenization, normalization, inverted-index construction, and wildcard query processing for document retrieval on the Reuters Corpus. |

|

|

|

|

MS Research Award

Awarded the College of Computing MS Research Award at Georgia Tech. |

|

Adobe MAX SNEAKS

Was one of the 11 presenters from across Adobe at Adobe MAX SNEAKS 2019, presenting my work on multi-conditional image generation - Video link. |

|

Google Code Jam

Achieved a global rank of 27 in "Code Jam to I/O for Women" and was invited to attend Google I/O 2018. |

|

|

|

|

Applied Research Scientist at Adobe, San Jose | May 2023 - Present

Working on GenAI - Firefly.

|

|

ML Intern at Adobe, San Jose | June 2022 - August 2022

Worked on real-time generation of temporally consistent videos for face makeup transfer.

|

|

Graduate Research Assistant, Visual Intelligence Lab, Georgia Tech | Aug 2021 - May 2023

Worked under the supervision of Prof. Devi Parikh and Prof. Dhruv Batra.

|

|

Machine Learning Software Development Engineer-2 at Adobe, India | July 2018 - August 2021

Built the chatbot framework for the Adobe Messaging platform from scratch, starting with Microsoft LUIS and Rasa and later designing an in-house multilingual intent classifier that uses embeddings from Google's Universal Sentence Encoder (USE) model. The chatbot serves ~20,000 customers daily.

|

|

|

|

|

Master of Science in Computer Science, Specialization in Machine Learning.

Georgia Institute of Technology, Atlanta.

|

|

Bachelor of Engineering (Hons.) in Computer Science.

BITS Pilani | Aug 2014 - July 2018. |

|

|